2022

Project Context Cyberith GmbH

Roles Motion and interaction analytics, rapid prototyping, Unity development, user research

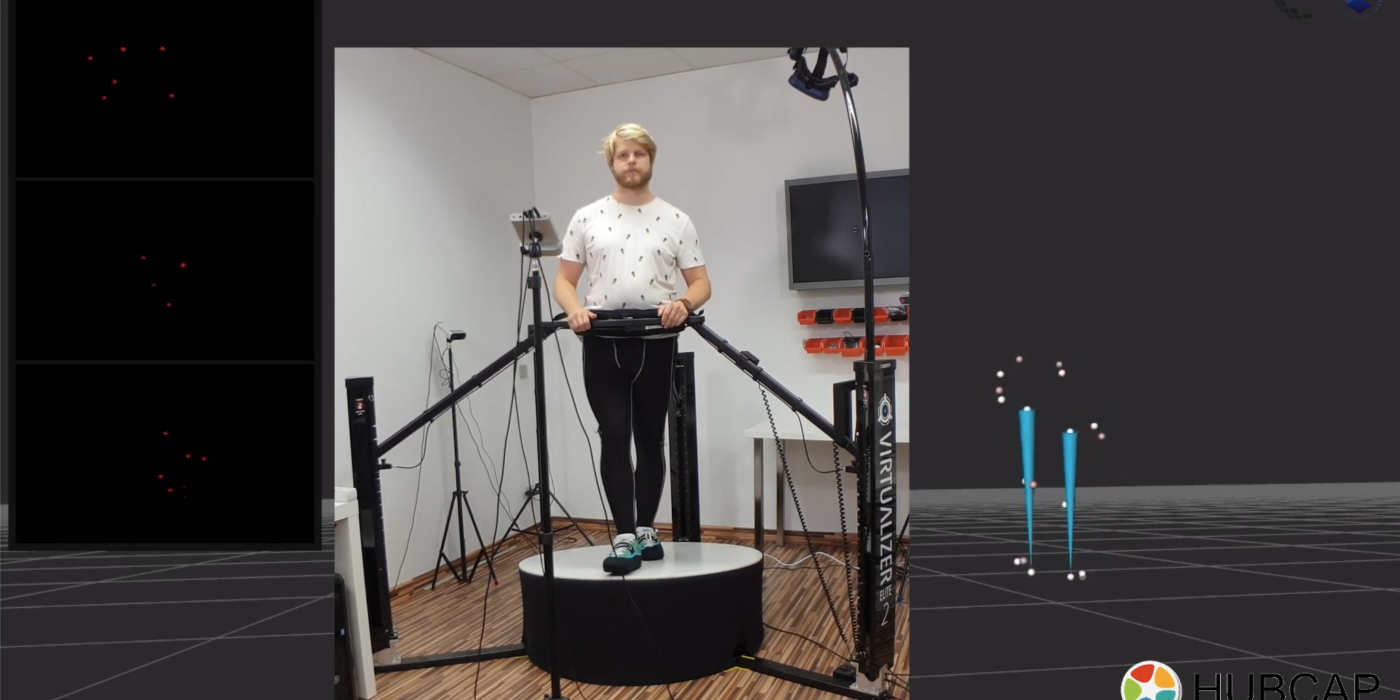

Challenge In a joint project between Cyberith and Codewheel, with the two teams working remotely from each other, we set out to improve the walking experience on Cyberith’s Virtualizer in virtual reality (VR). We looked at how live data from a motion capture system could be used to make physical and virtual adjustments to the walking experience.

Result We built a functional prototype that recorded the position of the feet in real time. The prototype used this data to adapt both the movement in VR and the physical motion of the baseplate under the user’s feet.

Details

The Virtualizer ELITE 2 of Cyberith provides a smooth walking experience, even though it uses relatively limited sensor data. For the usual VR experience, getting the movement speed and the direction of the user is all the system needs. Nevertheless, with the team at Cyberith we were curious to see what improvements we could bring to the walking experience if we could capture the exact location and speed of the user’s feet.

We worked together with Codewheel on the motion capture. During the project they developed and refined the software that gave us the position of the feet in real time. On our side, I brainstormed and developed new types of walking interactions using the data provided by Codewheel’s system. This meant changing the way the baseplate of the Virtualizer reacts to the user walking, and developing different methods of translating the motion capture data into movement of the user in VR. To find areas for improvement, I analysed the position and speed data of the feet. This helped me identify recurring walking patterns and see how our prototype affected them.
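As an illustration of the kind of position and speed analysis described above, here is a minimal Python sketch that derives foot speed from timestamped positions by finite differences. The sample layout `(t, x, y)`, the units and the function name `foot_speeds` are assumptions made for this example; they are not the format delivered by Codewheel’s software or the code used in the project.

```python
from math import hypot


def foot_speeds(samples):
    """Estimate horizontal foot speed from timestamped positions.

    samples: list of (t, x, y) tuples, with t in seconds and x, y in
    metres (an assumed layout, not the project's actual data format).
    Returns a list of (t, speed) pairs with speed in metres per second.
    """
    speeds = []
    # Pair each sample with the next one and take the finite difference.
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            # Skip duplicated or out-of-order timestamps.
            continue
        distance = hypot(x1 - x0, y1 - y0)
        speeds.append((t1, distance / dt))
    return speeds
```

Plotting the resulting speed series per foot is one way to make stride patterns, such as the alternation between stance and swing phases, visible.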

I worked towards the right solution iteratively. In Unity, I made small changes to the code that controls the virtual movement or the motion of the Virtualizer plate, then tested them right away while recording positional data of the feet. By analysing the data and plotting graphs, I could zoom in on the effect each code change had on the walking. The insights I gained then fed into the next round of code changes. Rinse and repeat. In between, I also tried out the changes with colleagues at Cyberith.

The final prototype improved the walking experience for users by applying dynamic smoothing to the virtual movement speed, based on the real-time position and speed of the feet. This removed unnatural virtual decelerations and made the movement feel smoother in VR. Furthermore, with the extra data we could detect a user’s intention to walk backwards faster and more reliably than with our original system. I prepared and ran a small user study to validate the prototype.
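The idea of dynamic smoothing can be sketched in a few lines of Python. This is a hypothetical illustration, not the prototype’s actual algorithm: it uses an exponential filter whose time constant grows when the raw speed drops while the feet are still moving, so short dips between steps are damped. The function name, parameters and threshold values are all assumptions chosen for the example.

```python
def smooth_speed(prev, raw, foot_speed, dt,
                 fast_tau=0.05, slow_tau=0.5, moving_threshold=0.2):
    """Return the next smoothed virtual speed (illustrative sketch).

    prev:       smoothed speed from the previous frame (m/s)
    raw:        unsmoothed speed reported for this frame (m/s)
    foot_speed: measured speed of the feet (m/s)
    dt:         frame time (s)
    The tau and threshold defaults are made-up values, not tuned ones.
    """
    decelerating = raw < prev
    feet_moving = foot_speed > moving_threshold
    # Damp decelerations only while the feet are still in motion;
    # otherwise follow the raw speed quickly so real stops feel responsive.
    tau = slow_tau if (decelerating and feet_moving) else fast_tau
    alpha = dt / (tau + dt)
    return prev + alpha * (raw - prev)
```

Called once per frame with the previous output as `prev`, the filter holds the virtual speed nearly steady through a brief dip in `raw` while the feet keep moving, but lets it fall quickly once the feet have stopped.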